If you need some of the features missing from Olive (or a more recent version of Junos), vMX is a good solution, but you will need a hypervisor to install it. Otherwise Olive should be good enough.

- Choose the junos.qcow2 file from the image folder to be used as HDA. Click finish, and we’ll edit the vMX-vCP vm: Assign vMX-vCP to the Router category, confirm it’s been assigned 2GB RAM, 1 vCPU, and that we’ll use Telnet. Next, we need to click on the HDD tab, since we must add two more files.

- The vMX is a full-featured, virtualized MX Series 3D Universal Edge Router. It helps you improve service agility, speed your time to market, and increase routing scale and performance. You can use it to create and centrally manage policy, gather and distribute threat intelligence, detect threats, and adapt and enforce policies in real time.

Are you studying for Juniper certifications but don’t have access to physical hardware? No worries: you can now get Juniper Junos for GNS3, including vMX and vSRX, fully tested and compatible with the current version of GNS3.

Simply import the appliances and the images, fire up your home lab and start your preparations. Apart from these, some important books on Juniper routing, switching and security are also bundled for your reference and self-study.

Download Juniper Junos for GNS3 here – https://i.srijit.com/JunOSGNS3

Feel free to drop a comment below in case of any further requirements or problems. Hope it helps!

These steps will help you install a Juniper vMX device on KVM. The steps assume you have already deployed a KVM host with all of the requirements that the vMX needs. If you did not already do this or you are deploying a new KVM host you can follow the instructions I have made here:

- CentOS 7.4 - I recommend using this, as the Ubuntu support is patchy at best and the requirements for Ubuntu are also very old.

- Ubuntu 14.04

I highly recommend that you deploy the host using the above instructions before continuing - deploying the vMX on a host that does not have the exact configuration required can be a frustrating experience.

For my vMX installations I am using SR-IOV for best performance, so the guide assumes that the interfaces assigned to the vFP are all SR-IOV. You will need to adjust the instructions depending on the vMX release that you are installing. I have extracted the vMX files to /home/vMX-[RELEASE] (in this case /home/vMX-18.1R1) so that when I upgrade the vMX I can keep the old copies in an obvious place.

Extract Files

- Download a copy of the vMX .tar.gz file for KVM on the host. Since I am deploying vMX release 18.1R1, the file name is vmx-bundle-18.1R1.9.tgz. The file can be downloaded from the Juniper vMX release page. This guide assumes that you have the .tar.gz file in /home/.

- Extract the tar.gz file. The files will be extracted to vmx/ - I recommend renaming this to the release as well.
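The extract-and-rename step can also be scripted. The following is a minimal Python sketch, not the commands from the original guide. The helper names `release_from_bundle` and `extract_bundle` are hypothetical, and the file name pattern is an assumption based only on the single example vmx-bundle-18.1R1.9.tgz, so other bundle names may need a different pattern.

```python
import re
import shutil
import tarfile
from pathlib import Path


def release_from_bundle(bundle_name: str) -> str:
    """Return the release (e.g. '18.1R1') from a name like 'vmx-bundle-18.1R1.9.tgz'.

    The pattern is an assumption derived from one example file name.
    """
    match = re.fullmatch(r"vmx-bundle-(\d+\.\d+R\d+)\.\d+\.tgz", bundle_name)
    if match is None:
        raise ValueError(f"unexpected bundle name: {bundle_name}")
    return match.group(1)


def extract_bundle(bundle: Path, base_dir: Path) -> Path:
    """Extract the bundle into base_dir and rename vmx/ to vMX-<release>."""
    target = base_dir / f"vMX-{release_from_bundle(bundle.name)}"
    if target.exists():
        # Never overwrite the files of a release that is already unpacked.
        raise FileExistsError(f"{target} already exists")
    with tarfile.open(bundle, "r:gz") as archive:
        archive.extractall(base_dir)
    # The bundle unpacks to vmx/; move it to the release-specific name.
    shutil.move(str(base_dir / "vmx"), str(target))
    return target
```

Under these assumptions, `extract_bundle(Path("/home/vmx-bundle-18.1R1.9.tgz"), Path("/home"))` would leave the files in /home/vMX-18.1R1, matching the layout used in the rest of this guide.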

Intel X710/XL710 Drivers - Ubuntu Only

Note: These steps only apply to Ubuntu 14.04 hosts. Do not do this for a CentOS host. This step also assumes that you are using an Intel X710 or XL710 NIC.

There are two drivers that need to be installed - Intel i40evf and Intel i40e. The Intel i40evf driver needs to be downloaded from the Intel website, while the i40e driver is included with the vMX. The i40e driver included with the vMX is patched to make certain features work when using SR-IOV; without the patch, things like 802.3ad frames will not be passed through to the vMX.

- First, try installing the i40e driver included with the vMX:

If you are trying to install a different release of the vMX, the drivers folder will most likely be different (e.g. for 17.4R1 the i40e driver is located in drivers/i40e-1.3.46/src instead).

Make sure that this is successful. You may get errors when compiling the driver; for example, I got this error when deploying vMX release 17.4R1:

If you do get errors like this, make sure that the kernel version that is currently running is supported by Juniper - check the 'Minimum Hardware and Software Requirements' page for the vMX release you are deploying to see which kernel is required. If the required kernel is already installed but not running, you will need to set GRUB to boot the older kernel and reboot the host before continuing. Do not continue until this step works.

- Download a copy of the Intel i40evf driver from Intel and extract it:

- Install the i40evf driver:

- Update the init image so that the new driver is included:

- Activate the new driver (alternatively you can reboot the host):
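Because the driver build depends on the running kernel, it can help to check the kernel version before starting the steps above. The following is a minimal Python sketch of such a check and is not part of the original procedure. The helper names are hypothetical, and the required version string in the example is only a placeholder - take the real value from Juniper's requirements page for your release.

```python
import platform
import re


def kernel_tuple(release: str) -> tuple:
    """Turn a kernel release such as '3.13.0-32-generic' into (3, 13, 0, 32)."""
    match = re.match(r"(\d+)\.(\d+)\.(\d+)(?:-(\d+))?", release)
    if match is None:
        raise ValueError(f"cannot parse kernel release: {release}")
    # A missing ABI number (e.g. plain '4.4.0') is treated as 0.
    return tuple(int(part) for part in match.groups(default="0"))


def kernel_matches(running: str, required: str) -> bool:
    """True when the running kernel has the same numeric version as the required one."""
    return kernel_tuple(running) == kernel_tuple(required)


if __name__ == "__main__":
    # Placeholder value, NOT taken from Juniper's documentation.
    required = "3.13.0-32-generic"
    running = platform.release()
    if kernel_matches(running, required):
        print(f"Running kernel {running} matches {required}")
    else:
        print(f"Running kernel {running} differs from {required}; check GRUB")
```

The comparison ignores suffixes such as -generic, so only the numeric part of the release has to match.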

Remove default bridge network - Ubuntu Only

Note: These steps only apply to Ubuntu 14.04 hosts. Do not do this for a CentOS host. This step also assumes that you are using an Intel X710 or XL710 NIC.

Remove the default bridge network that libvirt creates, as it can cause issues when starting the VMs for the vMX:

vMX Configuration File

The Juniper vMX configuration file needs to be created. The configuration file is in YAML format and defines what interfaces on the host to bind to the vMX and what resources the vMX will have. The Juniper vMX document from Juniper available here contains some sample configurations.

- Remove the default configuration file; it's easier to copy the entire config from here and adjust as needed:

- Edit vmx.conf in your favourite editor. You will need to change a few values from the sample configuration file I have provided:

- Update the routing-engine-image, routing-engine-hdd and forwarding-engine-image paths under HOST: to be the correct file locations - the RE image and vFPC image names may be different as well if you are using a release other than 18.1R1.

- Set the appropriate host-management-interface. This management interface is where the control plane and forwarding plane interfaces will be bound, which makes the initial setup and troubleshooting more convenient.

- Set the appropriate number of vCPUs for the control plane. For my case, 3 vCPUs is more than enough.

- Set the appropriate amount of RAM for the control plane. For my case 12GB is more than enough.

- Set the appropriate number of vCPUs for the forwarding plane. There are specific requirements from Juniper for this (available here), so make sure that you follow the requirements for your use case. Since I am using performance mode with SR-IOV I have assigned 17 vCPUs.

- The number of vCPUs cannot be overcommitted. If you have a server with 24 logical CPUs (for example dual CPU, each CPU with 6 cores/12 threads), the total number of vCPUs you allocate to the control plane and forwarding plane together cannot exceed 20 (4 CPUs must be left for the host).

- Set the correct IP details for the control plane and forwarding plane interfaces for management.

- The MAC addresses in the configuration file can be any MAC addresses that you like, but they must be unique.

- Set the correct interfaces under the JUNOS_DEVICES: section. These are the interfaces being assigned to the forwarding plane (revenue ports). All of the interfaces I am adding are 10G SR-IOV interfaces with the MTU set to 9400 (the maximum I can use). The virtual-function value should not be changed unless you have specific requirements to do that.
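Two of the rules in the list above are easy to get wrong by hand: the vCPU budget and the uniqueness of the MAC addresses. The following is a minimal Python sketch that checks both before the configuration is written. The function names are hypothetical, and the figure of 4 CPUs reserved for the host is taken from the example above rather than from Juniper's documentation.

```python
# CPUs left for the host, as in the 24 logical CPU example above.
HOST_RESERVED_CPUS = 4


def max_vmx_vcpus(logical_cpus: int, reserved: int = HOST_RESERVED_CPUS) -> int:
    """Largest number of vCPUs the vCP and vFP may use together."""
    return max(logical_cpus - reserved, 0)


def vcpu_plan_fits(logical_cpus: int, vcp_vcpus: int, vfp_vcpus: int) -> bool:
    """True when the control plane plus forwarding plane stay within the budget."""
    return vcp_vcpus + vfp_vcpus <= max_vmx_vcpus(logical_cpus)


def duplicate_macs(macs: list) -> list:
    """Return the MAC addresses that appear more than once.

    Addresses are compared case-insensitively, and '-' is treated like ':'.
    """
    seen = set()
    duplicates = []
    for mac in macs:
        key = mac.lower().replace("-", ":")
        if key in seen and key not in duplicates:
            duplicates.append(key)
        seen.add(key)
    return duplicates
```

With the values used in this guide, `vcpu_plan_fits(24, 3, 17)` holds because 3 + 17 is exactly the 20 vCPUs available on a host with 24 logical CPUs.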

Here is the sample configuration you can use:

vMX Installation

The vMX can now be installed. The install process will also start the vCP and vFP VMs.

Run the installer with verbose logging just in case you run into issues:

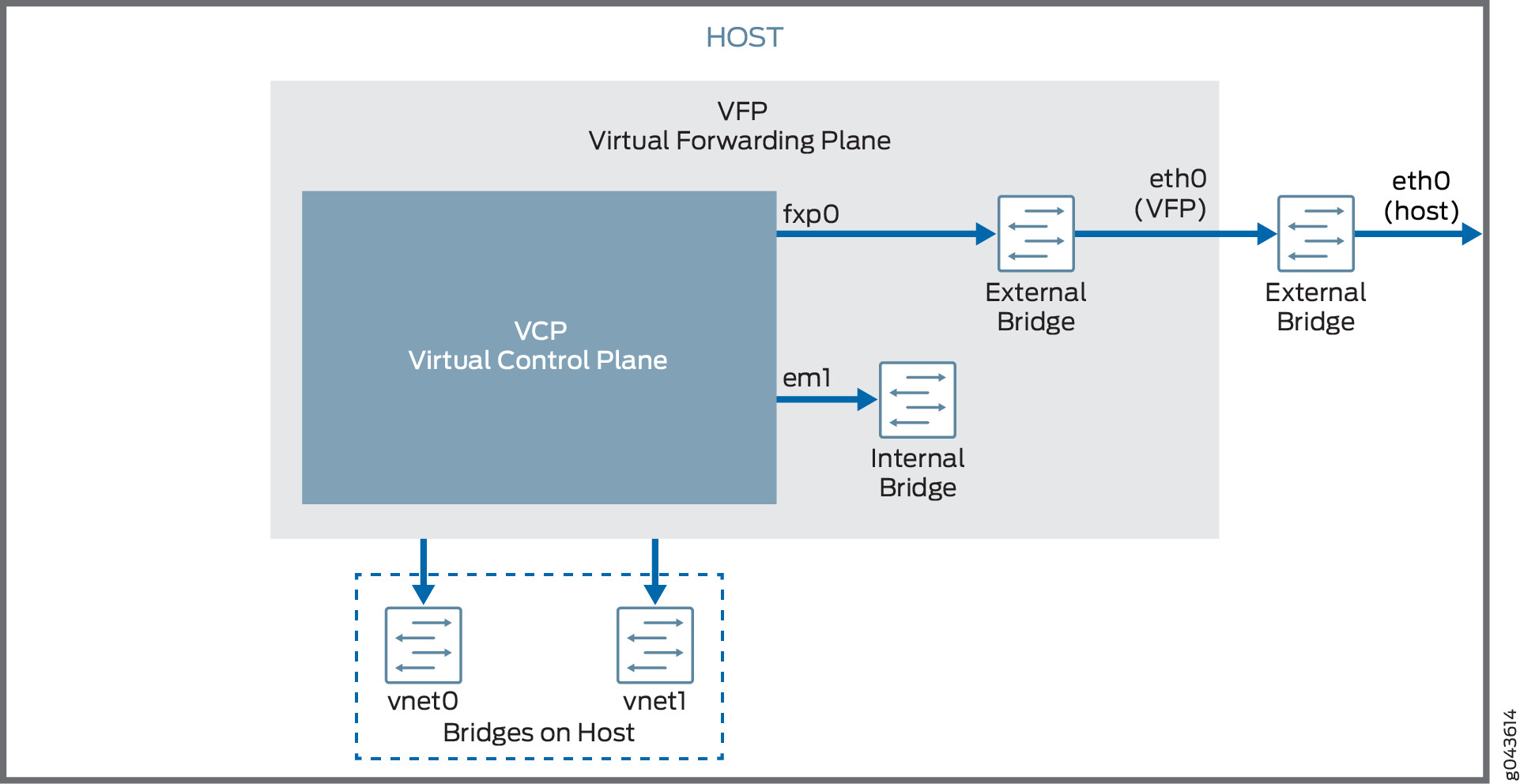

The vMX should start up. Once the vmx.sh script has finished running you can access the console of the vCP and vFP. The default username for the routing engine is root with no password. After the vCP finishes booting (it could take a couple of minutes) you should also be able to reach the management IP that is configured. The management IP uses the fxp0 interface.

After the VM has booted, log in to the console and do the minimal configuration needed to get SSH access:

- Set the root password:

set system root-authentication plain-text-password

- Enable SSH with root login:

set system services ssh root-login allow

- Commit

You can then SSH to the vCP management IP and continue the configuration. Configuring over the console also works (the three steps above are then not required), but pasting large amounts of configuration over the console causes issues that do not occur over SSH. With release 18.1R1 I experienced an issue after doing the steps above where I lost reachability to the management IP (I couldn't ping or SSH to it anymore) - I had to stop and start the VM for that to work again.

I also recommend applying these settings:

- Apply the license to the VM. The default trial license will only allow 10 Mbit/s of throughput.

- Enable performance mode:

set chassis fpc 0 performance-mode

- Set the number of ports for the FPC to the number of actual interfaces assigned to the vFP in the vmx.conf file. E.g. if you have 6 interfaces assigned to the vFP:

set chassis fpc 0 pic 0 number-of-ports 6

- Set the loopback device count to 1:

set chassis fpc 0 loopback-device-count 1
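Since the number-of-ports value has to follow the number of interfaces in vmx.conf, the three chassis statements above can be generated rather than typed. The following is a minimal Python sketch; the function name `chassis_commands` is hypothetical, and the interface count is assumed to be supplied by you rather than read from vmx.conf.

```python
def chassis_commands(interface_count: int, performance_mode: bool = True) -> list:
    """Build the recommended chassis statements for FPC 0.

    interface_count is the number of interfaces assigned to the vFP.
    """
    if interface_count < 1:
        raise ValueError("at least one interface must be assigned to the vFP")
    commands = []
    if performance_mode:
        commands.append("set chassis fpc 0 performance-mode")
    commands.append(f"set chassis fpc 0 pic 0 number-of-ports {interface_count}")
    commands.append("set chassis fpc 0 loopback-device-count 1")
    return commands


if __name__ == "__main__":
    # Six interfaces, as in the example above.
    print("\n".join(chassis_commands(6)))
```

The output is meant to be pasted into configuration mode over SSH and then committed.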

Starting on boot

To start the vMX on boot you will need to add either an init script (Ubuntu 14.04) or a systemd service (CentOS 7.4). I have included instructions on adding those and examples here.

Further Reading

I have some other guides available for enabling features as well as covering various errors that you may receive.